
Stream video with frame-level metadata via WebRTC using GStreamer

Christoph Rust, posted on February 7, 2025

Introduction

One of our customers was facing the challenge of streaming live image data to client applications connected over a network. A core requirement was that frame-level metadata be included in the stream (i.e., metadata and video frame must stay in sync at all times). At the same time, the stream had to be in a standard format so that different clients (browser-based and stand-alone applications) can play the video and read the metadata. Rather than inventing our own protocol, we wanted to rely on existing protocols and frameworks as much as possible.

WebRTC seems to be the de-facto standard framework for low-latency media streaming over networks these days and is natively supported by all major browsers. The WebRTC standard requires browsers to support only two video codecs, VP8 and H.264, and it was not immediately clear how we could embed the metadata into the stream. WebRTC data channels were not an option, as they would require additional logic to keep metadata packets and image frames in sync. Fortunately, the H.26x codec standards can transport arbitrary payloads per video frame in Supplemental Enhancement Information (SEI). In H.264, an SEI NAL unit (NAL unit type 6) can carry a "user data unregistered" SEI message (payload type 5), identified by a 16-byte UUID, as described in Annex D of the H.264 specification.
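To make the SEI mechanism more concrete, here is a minimal Rust sketch of how a single user data unregistered message is laid out inside an H.264 SEI NAL unit. It is not part of the plugin and not GStreamer's implementation, and the function and constant names are our own. The sketch stops at the RBSP level: the start code or length prefix and the emulation prevention bytes that a real bitstream additionally needs are left out.

```rust
/// nal_unit_type of an H.264 SEI NAL unit.
const NAL_TYPE_SEI: u8 = 6;
/// SEI payloadType of a "user data unregistered" message.
const PAYLOAD_USER_DATA_UNREGISTERED: u32 = 5;

/// Writes an SEI payloadType/payloadSize value: one 0xFF byte per full 255,
/// followed by the remainder (0..=254).
fn write_ff_coded(out: &mut Vec<u8>, mut value: u32) {
    while value >= 255 {
        out.push(0xFF);
        value -= 255;
    }
    out.push(value as u8);
}

/// Builds an H.264 SEI NAL unit carrying a single user data unregistered
/// message: NAL header + SEI RBSP. Start code / length prefix and emulation
/// prevention bytes are NOT added here.
fn build_sei_user_data_nal(uuid: &[u8; 16], data: &[u8]) -> Vec<u8> {
    let mut nal = Vec::with_capacity(data.len() + 24);
    // forbidden_zero_bit = 0, nal_ref_idc = 0 (required for SEI), nal_unit_type = 6
    nal.push(NAL_TYPE_SEI);
    write_ff_coded(&mut nal, PAYLOAD_USER_DATA_UNREGISTERED);
    // payloadSize covers the 16-byte UUID plus the user data
    write_ff_coded(&mut nal, (uuid.len() + data.len()) as u32);
    nal.extend_from_slice(uuid);
    nal.extend_from_slice(data);
    // rbsp_trailing_bits: a single 1 bit, then zero bits up to the byte boundary
    nal.push(0x80);
    nal
}
```

For a 3-byte payload, this yields 23 bytes: the NAL header, payload type 5, payload size 19, the UUID, the data and the trailing byte.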

Implementation-wise, we had a strong preference for Rust, which is well known for its excellent performance (on par with C/C++), its unique safety features, and awesome tooling. Moreover, the widely used multimedia framework GStreamer offers Rust bindings that integrate well with the Rust ecosystem, and many plugins included with the official installer are already written in Rust. Luckily, one of them is an all-batteries-included WebRTC plugin.

Therefore, the main challenge left to us was getting the metadata into the encoded video stream.

The remainder of this blog post gives a brief overview of the whole framework. Its focus, however, is more technical: it addresses the challenge of leveraging GStreamer to send frame-level metadata in an encoded video stream and to access that metadata on the client side. For that reason, this blog post also demonstrates how to play the stream and read the metadata in a TypeScript/React frontend client.

This article assumes basic familiarity with GStreamer terminology (e.g., Pipeline, Element, Buffer). However, we try to make the content as accessible as possible.

Framework overview

WebRTC is a collection of protocols and interfaces that enable real-time media streaming over networks such as the internet. It is well integrated into modern browsers and ubiquitous in video-conferencing software like Microsoft Teams, Jitsi, Google Meet, you name it.

Under the hood, peers can send and receive media streams (video, audio, arbitrary data) directly to and from other peers while negotiation is done via a central signaling server. We refer the interested reader to the well-written book WebRTC for the Curious.

The aforementioned webrtc plugin for GStreamer abstracts away most of the complexity related to WebRTC. As of version 0.14, the included webrtcsink element even ships with its own signaling server. Moreover, its authors created a JavaScript library that can be used as the browser counterpart of the GStreamer pipeline elements.

In our use case, one application was responsible for receiving the video stream and processing it in a GStreamer pipeline terminated by a webrtcsink element (producer side). A counterpart application (consumer side) would receive the stream and perform some further business logic on it, also using a GStreamer pipeline. A third, user-facing browser application should also play the video stream.

Frame-level meta data

GStreamer internally supports carrying arbitrary metadata together with a buffer, in a GstMeta object, while the buffer flows through a pipeline. However, to have the metadata included in the H.26x-encoded bitstream, the payload has to be added to the buffer's memory, formatted as an SEI Unregistered User Data payload. Fortunately, the GStreamer Video Library already supports attaching SEI Unregistered User Data payloads to buffers as GstMeta objects. These can be converted into a properly formatted GstMemory via gst_h264_create_sei_memory_avc from the codecparser library (available since GStreamer version 1.24.0).

Such a GstMemory can then be appended to the encoded buffer's memory.
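Conceptually, turning such an SEI NAL unit into memory suitable for an avc stream involves two more formatting steps. First, emulation prevention bytes are inserted so that no start-code-like pattern appears inside the NAL unit. Second, the NAL unit is prefixed with its big-endian length. The Rust sketch below illustrates the format only. It is not the code of gst_h264_create_sei_memory_avc, and the helper names are our own. The prefix size (commonly 4 bytes) actually comes from the stream's codec_data.

```rust
/// Inserts emulation prevention bytes: whenever two 0x00 bytes are followed by
/// a byte <= 0x03, a 0x03 is inserted so that no start code can appear.
fn add_emulation_prevention(nal: &[u8]) -> Vec<u8> {
    let mut out = Vec::with_capacity(nal.len() + nal.len() / 64 + 1);
    let mut zeros = 0usize;
    for &byte in nal {
        if zeros >= 2 && byte <= 0x03 {
            out.push(0x03);
            zeros = 0;
        }
        out.push(byte);
        zeros = if byte == 0x00 { zeros + 1 } else { 0 };
    }
    out
}

/// Frames a NAL unit for stream-format=avc: big-endian length prefix of
/// `nal_length_size` bytes (1..=4), followed by the escaped NAL unit.
fn to_avc_nal(nal: &[u8], nal_length_size: usize) -> Vec<u8> {
    assert!((1..=4).contains(&nal_length_size), "invalid NAL length size");
    let escaped = add_emulation_prevention(nal);
    let len = escaped.len() as u64;
    assert!(len < 1u64 << (8 * nal_length_size), "NAL unit too large for prefix");
    let mut out = Vec::with_capacity(nal_length_size + escaped.len());
    // keep only the lowest `nal_length_size` bytes of the big-endian length
    out.extend_from_slice(&(len as u32).to_be_bytes()[4 - nal_length_size..]);
    out.extend_from_slice(&escaped);
    out
}
```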

To leverage these functionalities, we decided to implement a custom GStreamer plugin in Rust.

Implementation

GStreamer already allows attaching arbitrary metadata to a media buffer as GstMeta objects. Since version 1.22, there is also a special variant, GstVideoSEIUserDataUnregisteredMeta, which targets the H.264 facility for transporting arbitrary frame-level payloads. Such GstVideoSEIUserDataUnregisteredMeta objects can be attached to a video buffer anywhere in a GStreamer pipeline and stay with the buffer as it flows through the pipeline. However, GstMeta objects are internal to GStreamer and are not written to the buffer's memory. As a result, the data held in the meta object is lost once the buffer leaves the pipeline (e.g., via filesink).

To make the meta data available after an encoded video buffer has left the

pipeline, it has to be added as a properly formatted GstMemory object to the

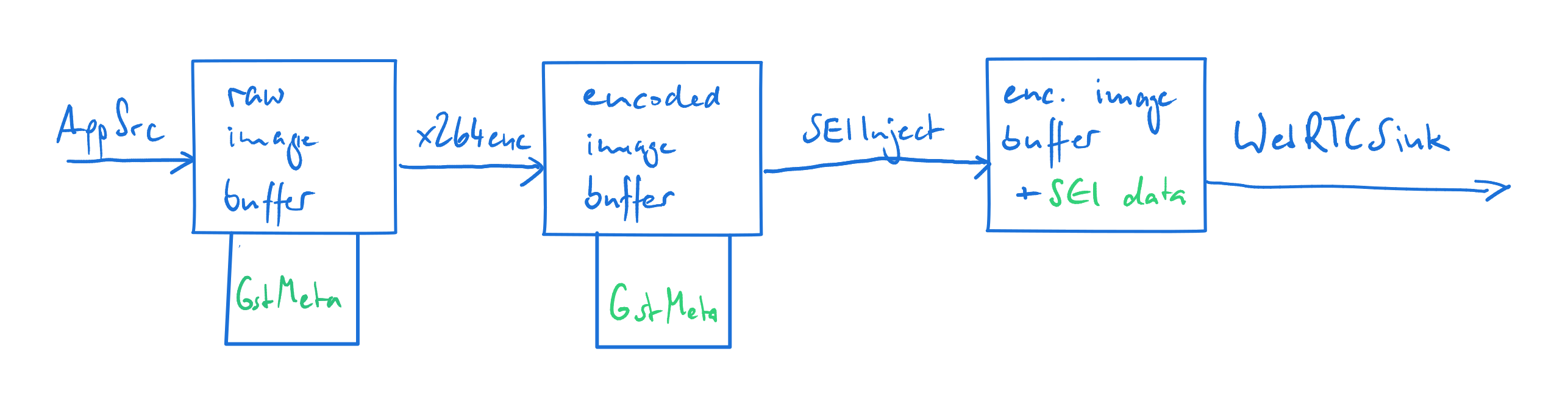

buffer’s memory. This is the role of a pipeline element named SEIInject that

has to be placed after an encodebin element. A producer pipeline could look as

follows

where instead of piping into a webrtcsink element, the H264-encoded stream can

likewise be sent to other compatible elements (filesink, matroskamux,…).

The resulting binary representation of the video stream then contains

frame-level meta data as SEI Unregistered User Data.

GStreamer plugin for injecting/extracting SEI User Data to/from an H26x-encoded stream

Developing a GStreamer plugin involves writing quite a lot of boilerplate code, and this is also true for plugins written in Rust. There is a neat tutorial on this topic that is definitely worth reading.

Our plugin will act on an encoded buffer as follows:

- check whether GstMeta objects of the kind GstVideoSEIUserDataUnregisteredMeta are attached;
- if so, modify the buffer's memory by appending a properly formatted GstMemory object.

The right base class to derive from is GstBaseTransform, and our main logic will live in its transform method.

Interfacing the codecparser library

As of this writing, no Rust bindings have been published for the codecparser library, probably because its API is still considered unstable. We therefore have to make the functionality of gst_h264_create_sei_memory_avc available in Rust ourselves. Instead of creating bindings for the whole codecparser library, we decided to write a custom wrapper in C and create Rust bindings for it, exposing only what is necessary for our use case.

This wrapper defines a struct that holds the data for the SEI payload and a function that creates a GstMemory from it. Our wrapper struct also differs slightly from the original GstH264UserDataUnregistered in its member types: it uses standard C types instead of the GLib aliases.

```c
#ifndef GST_SEI_WRAPPER_H_
#define GST_SEI_WRAPPER_H_

#include "gst/gstmemory.h"

/**
 * GstH264UserDataUnregisteredWrapper:
 *
 * A wrapper struct around `GstH264UserDataUnregistered`. The wrapper is
 * introduced to reduce the number of types exposed in this header.
 */
typedef struct _GstH264UserDataUnregisteredWrapper {
  unsigned char uuid[16];      /* identifies the payload on the receiver side */
  const unsigned char *data;   /* user data to embed (not owned) */
  int size;                    /* number of bytes in data */
} GstH264UserDataUnregisteredWrapper;

/**
 * gst_h264_create_sei_memory_wrapper:
 * @unregistered_user_data: A wrapper struct for `GstH264UserDataUnregistered`,
 *   containing a `uuid` and a byte array with the data to be added to the bitstream.
 *
 * Returns: A pointer to a GstMemory which can be appended to the memories of a
 * `GstBuffer`.
 */
GstMemory *
gst_h264_create_sei_memory_wrapper (GstH264UserDataUnregisteredWrapper * unregistered_user_data);

#endif // GST_SEI_WRAPPER_H_
```

The implementation of gst_h264_create_sei_memory_wrapper assembles a GArray containing the SEI message, calls gst_h264_create_sei_memory_avc, and returns the address of the resulting GstMemory to its caller.

Rust bindings can easily be generated using bindgen:

```shell
bindgen --allowlist-function="gst_h264_create_sei_memory_wrapper" \
  --allowlist-type="GstH264UserDataUnregisteredWrapper" \
  -o bindings.rs gst_sei_wrapper.h -- $(pkg-config gstreamer-plugins-bad-1.0 --cflags)
```

GStreamer plugin implementation

Let us now turn to the actual plugin implementation. For the sake of brevity, we will not go through all the plugin boilerplate code (define Rust wrapper types, registration,…), but focus only on the relevant adjustments specific to our plugin.

Our main type is an empty struct called SEIInject:

```rust
#[derive(Default)]
pub struct SEIInject {}
```

To get the correct behaviour of our plugin, we have to implement the traits ObjectImpl, ObjectSubclass, GstObjectImpl, ElementImpl, BaseTransformImpl, and VideoFilterImpl. The implementations of ObjectImpl, ObjectSubclass, GstObjectImpl, and VideoFilterImpl are either empty or not specific to our plugin and are therefore omitted.

The first adjustment specific to our plugin defines its metadata and how it interacts with other pipeline elements in terms of exposed pads:

```rust
use std::str::FromStr;
use std::sync::LazyLock;

use gst::subclass::prelude::*;

impl ElementImpl for SEIInject {
    fn metadata() -> Option<&'static gst::subclass::ElementMetadata> {
        static ELEMENT_METADATA: LazyLock<gst::subclass::ElementMetadata> = LazyLock::new(|| {
            gst::subclass::ElementMetadata::new(
                "SEI Injector for H264/H265 video",
                "Filter/Video",
                "Inject SEI unregistered user data into the h264/h265 bitstream.",
                "Christoph Rust",
            )
        });
        Some(&*ELEMENT_METADATA)
    }

    fn pad_templates() -> &'static [gst::PadTemplate] {
        static PAD_TEMPLATES: LazyLock<Vec<gst::PadTemplate>> = LazyLock::new(|| {
            // Length-prefixed, access-unit-aligned H.264
            let mut caps =
                gst::Caps::from_str("video/x-h264,stream-format=avc,alignment=au").unwrap();
            // H.265 has no "avc" stream format; its packetized variants are hvc1/hev1.
            // Note: the C wrapper above only formats H.264 SEI NAL units.
            let h265_caps = gst::Caps::from_str(
                "video/x-h265,stream-format=(string){ hvc1, hev1 },alignment=au",
            )
            .unwrap();
            caps.merge(h265_caps);

            // Identical caps on both pads: we only add data, the format stays the same.
            let src_pad_template = gst::PadTemplate::new(
                "src",
                gst::PadDirection::Src,
                gst::PadPresence::Always,
                &caps,
            )
            .unwrap();
            let sink_pad_template = gst::PadTemplate::new(
                "sink",
                gst::PadDirection::Sink,
                gst::PadPresence::Always,
                &caps,
            )
            .unwrap();

            vec![src_pad_template, sink_pad_template]
        });
        PAD_TEMPLATES.as_ref()
    }
}
```

By setting these caps, we ensure that only compatible elements can be linked to our plugin. These are elements that send or receive H.264/H.265-encoded video in the packetized format, where every NAL unit is prefixed with its length instead of an Annex B start code (stream-format=avc for H.264). The video must also be au-aligned, meaning each buffer holds a whole access unit.
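Because of these caps, every buffer our element sees is one complete access unit made of length-prefixed NAL units. The stdlib-only Rust sketch below splits such a buffer and locates the first slice NAL unit. The helper names are hypothetical, and the example assumes 4-byte prefixes. The position of the first slice is of interest because, as we read the H.264 NAL unit ordering rules, an SEI NAL unit that follows the last slice of a picture formally starts the next access unit. Inserting the SEI before the first slice would therefore be the strictly conformant placement, whereas the transform method below appends it.

```rust
/// Splits an access unit in stream-format=avc (every NAL unit preceded by its
/// big-endian length in `nal_length_size` bytes) into NAL unit slices.
/// Returns None if a length prefix points past the end of the buffer.
fn split_avc_au(au: &[u8], nal_length_size: usize) -> Option<Vec<&[u8]>> {
    assert!((1..=4).contains(&nal_length_size), "invalid NAL length size");
    let mut nals = Vec::new();
    let mut pos = 0;
    while pos < au.len() {
        let prefix = au.get(pos..pos + nal_length_size)?;
        let len = prefix.iter().fold(0usize, |acc, &b| (acc << 8) | b as usize);
        pos += nal_length_size;
        nals.push(au.get(pos..pos + len)?);
        pos += len;
    }
    Some(nals)
}

/// H.264 nal_unit_type is stored in the low 5 bits of the first NAL byte.
fn nal_type(nal: &[u8]) -> u8 {
    nal.first().map_or(0, |b| b & 0x1F)
}

/// Index of the first slice (VCL NAL unit, types 1..=5), i.e. the position
/// before which an SEI NAL unit would go in a strictly ordered access unit.
fn first_vcl_index(nals: &[&[u8]]) -> Option<usize> {
    nals.iter().position(|nal| (1..=5).contains(&nal_type(nal)))
}
```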

The main logic of our plugin lives in the implementation of BaseTransformImpl. Its transform_caps method defines which caps we expose on the src pad for caps negotiated on the sink pad, and vice versa. In our case this is pretty simple, as src and sink caps are always identical:

```rust
impl BaseTransformImpl for SEIInject {
    // We always produce a separate output buffer (see `transform` below).
    const MODE: gst_base::subclass::BaseTransformMode =
        gst_base::subclass::BaseTransformMode::NeverInPlace;
    const PASSTHROUGH_ON_SAME_CAPS: bool = false;
    const TRANSFORM_IP_ON_PASSTHROUGH: bool = false;

    fn transform_caps(
        &self,
        direction: gst::PadDirection,
        caps: &gst::Caps,
        filter: Option<&gst::Caps>,
    ) -> Option<gst::Caps> {
        // Injecting SEI data does not change the format: both pads use the same caps.
        let other_caps = caps.clone();

        // `CAT` is the plugin's debug category, defined with the plugin boilerplate.
        gst::debug!(
            CAT,
            imp = self,
            "Transformed caps from {} to {} in direction {:?}",
            caps,
            other_caps,
            direction
        );

        if let Some(filter) = filter {
            Some(filter.intersect_with_mode(&other_caps, gst::CapsIntersectMode::First))
        } else {
            Some(other_caps)
        }
    }
}
```

With the transform method of the same impl block, we finally define how to append the SEI payload to the buffer's memory. Since we add data to the buffer's memory, we do not modify the incoming buffer in place (hence MODE = NeverInPlace above). Instead, we copy the incoming buffer's memory into a new buffer (outbuf) and append the formatted SEI payload.

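```rust
impl BaseTransformImpl for SEIInject {
    // ...

    fn transform(
        &self,
        inbuf: &gst::Buffer,
        outbuf: &mut gst::BufferRef,
    ) -> Result<gst::FlowSuccess, gst::FlowError> {
        // The default prepare_output_buffer() may already have allocated memory
        // of the input size in outbuf; drop it so that only the copied memory
        // (plus our SEI NAL units) ends up in the output.
        outbuf.remove_all_memory();

        // Reference the encoded NAL units of the input access unit in outbuf.
        inbuf
            .copy_into(outbuf, gst::BufferCopyFlags::MEMORY, 0..)
            .map_err(|_| gst::FlowError::Error)?;

        // Append one SEI NAL unit per GstVideoSEIUserDataUnregisteredMeta on the input.
        SEIUserData::iter_from_gst_buf_meta(inbuf).for_each(|sei_user_data| {
            let mem = create_sei_memory(&sei_user_data);
            outbuf.append_memory(mem);
        });

        Ok(gst::FlowSuccess::Ok)
    }
}
```

Here we use another custom type, SEIUserData, which holds the actual SEI data (UUID and payload). It provides methods to extract this data from, and attach it to, a buffer's GstMeta objects, as well as conversions to and from GstH264UserDataUnregisteredWrapper. A separate function, create_sei_memory, performs the FFI call to gst_h264_create_sei_memory_wrapper.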
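The article does not show SEIUserData itself, so the following is only a hypothetical reconstruction of its data part. The method names new and uuid_ref are the ones used in this article. The data and as_wrapper methods, as well as the hand-written mirror of the bindgen-generated struct, are our assumptions. The meta-related methods (iter_from_gst_buf_meta, append_to_gst_buf) require the gstreamer crate and are left out.

```rust
use std::os::raw::{c_int, c_uchar};

/// Hand-written mirror of what bindgen generates from gst_sei_wrapper.h
/// (in the real plugin this type would come from bindings.rs).
#[repr(C)]
#[derive(Debug)]
pub struct GstH264UserDataUnregisteredWrapper {
    pub uuid: [c_uchar; 16],
    pub data: *const c_uchar,
    pub size: c_int,
}

/// Hypothetical reconstruction of SEIUserData: a 16-byte UUID plus an owned payload.
#[derive(Debug, Clone, PartialEq)]
pub struct SEIUserData {
    uuid: [u8; 16],
    data: Vec<u8>,
}

impl SEIUserData {
    pub fn new(uuid: [u8; 16], data: Vec<u8>) -> Self {
        Self { uuid, data }
    }

    pub fn uuid_ref(&self) -> &[u8; 16] {
        &self.uuid
    }

    pub fn data(&self) -> &[u8] {
        &self.data
    }

    /// Borrows the payload for the FFI call: `data` points into `self.data`,
    /// so `self` must outlive every use of the returned struct.
    pub fn as_wrapper(&self) -> GstH264UserDataUnregisteredWrapper {
        GstH264UserDataUnregisteredWrapper {
            uuid: self.uuid,
            data: self.data.as_ptr(),
            size: c_int::try_from(self.data.len()).expect("payload larger than c_int::MAX"),
        }
    }
}
```

Borrowing instead of copying keeps the conversion cheap. The price is that the SEIUserData must stay alive until gst_h264_create_sei_memory_wrapper has returned, which is naturally the case when create_sei_memory takes a &SEIUserData.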

Plugin usage

Produce encoded video with meta data

Once our plugin is compiled, we can use it either in an ad-hoc pipeline from the terminal or in a program. In both cases, GStreamer has to find the dynamic library of our plugin. Either the compiled artifact is placed in one of GStreamer's plugin directories (for example ~/.local/share/gstreamer-1.0/plugins), or the environment variable GST_PLUGIN_PATH points to its file system location. Alternatively, the plugin code can be used as a Rust crate. In that case, it is linked statically into the executable and registered from within the program.

The main task is to create a pipeline like the following (note the position of our element rsseiinject after the encodebin element!):

```
appsrc ! encodebin ! rsseiinject ! appsink
```

A GstMeta object is attached to each buffer before the buffer is pushed into the pipeline via AppSrc's push_buffer method:

```rust
let mut buffer: gst::Buffer = {
    // ...
};

// We can later identify our payload on the receiver side based on this UUID.
let uuid: [u8; 16] = hex::decode("48656c6c6f20776f726c642164214865")
    .unwrap()
    .try_into()
    .unwrap();

let payload: Vec<u8> = {
    // ...
};

let user_data = SEIUserData::new(uuid, payload);

// Attach the SEI payload as a meta object to the buffer.
user_data.append_to_gst_buf(&mut buffer);

// Push the buffer into the pipeline.
app_src.push_buffer(buffer).unwrap();
```

The pipeline element rsseiinject takes care of putting our payload into the actual encoded bitstream, and we are done on the producer side.
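The examples use the hex crate to decode the UUID. If you would rather avoid that dependency, a small stdlib-only parser does the job. In the sketch below, parse_uuid_hex is our own helper, not part of the plugin. The sketch also shows that this particular UUID is simply the ASCII string "Hello world!d!He". Read as a big-endian u128, it gives the same number as the hex literal used for the BigInt comparison in the browser code further down.

```rust
/// Parses a 32-digit hex string into a 16-byte SEI UUID.
/// Returns None for a wrong length or non-hex characters.
fn parse_uuid_hex(s: &str) -> Option<[u8; 16]> {
    if s.len() != 32 || !s.bytes().all(|b| b.is_ascii_hexdigit()) {
        return None;
    }
    let mut uuid = [0u8; 16];
    for (i, byte) in uuid.iter_mut().enumerate() {
        // Slicing is safe: all characters were checked to be ASCII hex digits.
        *byte = u8::from_str_radix(&s[2 * i..2 * i + 2], 16).ok()?;
    }
    Some(uuid)
}
```

Since these bytes happen to be printable ASCII, `*b"Hello world!d!He"` would work as a constant just as well.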

To make this work in a WebRTC setup, replace the appsink element above with webrtcsink. Alternatively, one can let webrtcsink set up the encoding itself and place rsseiinject between encoder and payloader via its request-encoded-filter signal.

Read meta data from encoded video

Getting the payload back from the encoded stream in a receiver pipeline is much simpler, since h264parse does almost the reverse of our plugin rsseiinject: it places the SEI message into a GstMeta object. All we have to do is call the method SEIUserData::iter_from_gst_buf_meta on a parsed buffer and filter for our UUID:

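```rust
// The type annotation lets `try_into` pick the target type; the comparison
// below alone would be ambiguous.
let uuid: [u8; 16] = hex::decode("48656c6c6f20776f726c642164214865")
    .unwrap()
    .try_into()
    .unwrap();

SEIUserData::iter_from_gst_buf_meta(&buffer)
    .filter(|sei_user_data| sei_user_data.uuid_ref() == &uuid)
    .for_each(|sei_user_data| {
        // ...
    });
```

Read meta data in a web browser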

In our application, we also have to read the metadata contained in the encoded video stream on the client side. This means we need access to the encoded frames in a web browser and have to parse the SEI message there. Unfortunately, the browser world is not very homogeneous, but the approach presented here should work with Firefox >= 117, Safari >= 15.4 and Chromium-based browsers >= 94.

Fortunately, there is the JS library nal-extractor, which hides the differences between the browser APIs and provides all the functionality needed to extract SEI messages.
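For readers curious what such an extractor does for each SEI NAL unit, the following Rust sketch walks through the steps. It is our own illustration, not nal-extractor's implementation, and the function names are hypothetical. First, it strips the emulation prevention bytes. It then reads the ff-coded payload type and size of each SEI message and picks out type 5 (user data unregistered), whose first 16 bytes are the UUID.

```rust
/// Removes emulation prevention bytes (a 0x03 that follows two 0x00 bytes).
fn remove_emulation_prevention(ebsp: &[u8]) -> Vec<u8> {
    let mut out = Vec::with_capacity(ebsp.len());
    let mut zeros = 0;
    for &byte in ebsp {
        if zeros >= 2 && byte == 0x03 {
            zeros = 0; // drop the 0x03 and restart the zero count
            continue;
        }
        zeros = if byte == 0x00 { zeros + 1 } else { 0 };
        out.push(byte);
    }
    out
}

/// Reads an ff-coded SEI payloadType/payloadSize value starting at `*pos`.
fn read_ff_coded(buf: &[u8], pos: &mut usize) -> Option<usize> {
    let mut value = 0usize;
    loop {
        let byte = *buf.get(*pos)?;
        *pos += 1;
        value += byte as usize;
        if byte != 0xFF {
            return Some(value);
        }
    }
}

/// Returns (uuid, data) of every user data unregistered message in one H.264
/// SEI NAL unit (NAL header included, without start code or length prefix).
fn sei_user_data(nal: &[u8]) -> Vec<([u8; 16], Vec<u8>)> {
    let mut found = Vec::new();
    // nal_unit_type 6 = SEI
    if nal.first().map(|b| b & 0x1F) != Some(6) {
        return found;
    }
    let rbsp = remove_emulation_prevention(&nal[1..]);
    let mut pos = 0;
    // Continue while more than the final rbsp_trailing_bits byte remains.
    while pos + 1 < rbsp.len() {
        let Some(payload_type) = read_ff_coded(&rbsp, &mut pos) else { break };
        let Some(size) = read_ff_coded(&rbsp, &mut pos) else { break };
        let Some(payload) = rbsp.get(pos..pos + size) else { break };
        pos += size;
        // payloadType 5 = user data unregistered: 16-byte UUID, then user data
        if payload_type == 5 && size >= 16 {
            let mut uuid = [0u8; 16];
            uuid.copy_from_slice(&payload[..16]);
            found.push((uuid, payload[16..].to_vec()));
        }
    }
    found
}
```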

All we have to do is create a Web Worker script that extracts the SEI message from each encoded frame and posts the decoded payload back to the main browser context.

```typescript
// SEIWebWorker.ts
import { SEIExtractor, SEIMessageType, startRtpScriptTransformService } from "nal-extractor";

// UUID identifying our payload (same value as on the producer side)
const OUR_UUID = BigInt("0x48656c6c6f20776f726c642164214865");

startRtpScriptTransformService((transformer, options: any) => {
  console.log("Received a transformer for options:", options);

  const transform = new TransformStream({
    start() {},
    async transform(encodedFrame, controller) {
      // encodedFrame.data is an ArrayBuffer holding one encoded access unit
      const buffer = new Uint8Array(encodedFrame.data);
      const extractor = new SEIExtractor();
      try {
        const seiMessages = extractor.processAU(buffer);
        seiMessages.forEach((message) => {
          if (message.type === SEIMessageType.USER_DATA_UNREGISTERED &&
              message.uuid === OUR_UUID) {
            const seiMessage = {
              // decode payload contained in message.data
            };
            postMessage(seiMessage);
          }
        });
      } catch (e) {
        console.log("Webworker parsing-error:", e);
      }
      // Always forward the frame unchanged so that playback continues.
      controller.enqueue(encodedFrame);
    },
  });

  transformer.readable.pipeThrough(transform).pipeTo(transformer.writable);
});
```

In the main browser context, we have to create the web worker, define its onmessage callback, and attach the correct transceiver to it. Vite makes the creation of a web worker particularly easy:

```typescript
// main browser context
import SEIWebWorker from "./SEIWebWorker?worker";

// Imported bindings are read-only, so instantiate the worker under a new name.
const SEIWorker = new SEIWebWorker();

SEIWorker.onmessage = (ev) => {
  console.log("Received SEI message: ", ev.data);
};
```

Finally, to attach our stream transceivers to our web worker, we have to call attachRtpScriptTransform in the callback of the streamsChanged signal of our GstWebRTCAPI instance:

```typescript
import { attachRtpScriptTransform } from "nal-extractor";

// ...

session.addEventListener("streamsChanged", () => {
  const streams = session.streams;
  if (streams.length > 0) {
    // handle streams
    // ...

    // Route the encoded frames of every receiver through the SEI worker.
    for (const transceiver of session.rtcPeerConnection.getTransceivers()) {
      const options: [any, Transferable[]] = [
        { mid: transceiver.mid },
        [],
      ];
      attachRtpScriptTransform(transceiver.receiver, SEIWorker, { options });
    }
  } else {
    console.log("no streams");
  }
});

// ...
```

Further considerations

It of course makes a lot of sense to serialize the actual payload, carried in the data member of the SEI message, with a common binary format such as MessagePack. On the Rust side, serde is your friend.
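If pulling in serde and a MessagePack implementation is not desired, a fixed binary layout is a simple, if less flexible, alternative. The sketch below uses a purely hypothetical FrameMeta record with big-endian integer fields and a length-prefixed UTF-8 label. Its encoded bytes are what would end up in the SEI payload, and thus in message.data on the browser side.

```rust
/// Hypothetical per-frame metadata record.
#[derive(Debug, Clone, PartialEq)]
struct FrameMeta {
    frame_id: u64,
    timestamp_ns: u64,
    label: String,
}

impl FrameMeta {
    /// Layout: frame_id (u64 BE) | timestamp_ns (u64 BE) | label length (u32 BE) | label (UTF-8)
    fn encode(&self) -> Vec<u8> {
        let mut out = Vec::with_capacity(20 + self.label.len());
        out.extend_from_slice(&self.frame_id.to_be_bytes());
        out.extend_from_slice(&self.timestamp_ns.to_be_bytes());
        out.extend_from_slice(&(self.label.len() as u32).to_be_bytes());
        out.extend_from_slice(self.label.as_bytes());
        out
    }

    /// Inverse of `encode`; returns None for truncated or non-UTF-8 input.
    fn decode(buf: &[u8]) -> Option<Self> {
        let frame_id = u64::from_be_bytes(buf.get(0..8)?.try_into().ok()?);
        let timestamp_ns = u64::from_be_bytes(buf.get(8..16)?.try_into().ok()?);
        let len = u32::from_be_bytes(buf.get(16..20)?.try_into().ok()?) as usize;
        let label = String::from_utf8(buf.get(20..20 + len)?.to_vec()).ok()?;
        Some(Self { frame_id, timestamp_ns, label })
    }
}
```

Unlike MessagePack, such a layout is not self-describing. Producer and all consumers therefore have to agree on it, including on how it evolves.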